It’s not just Disney that has tiny robots capable of complex movements. Google DeepMind recently published a paper discussing how deep reinforcement learning can be used to train low-cost miniature humanoid robots to learn complex movement skills. The London-headquartered research lab was renamed Google DeepMind as part of a recent restructuring that merged the Google Brain team with the DeepMind team.

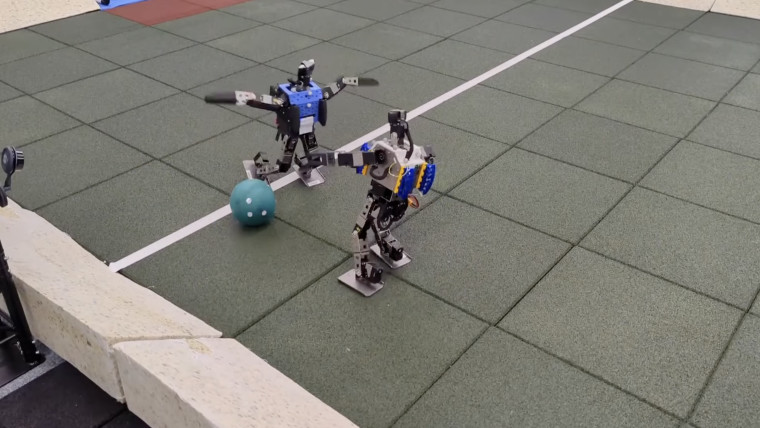

The researchers trained small humanoid robots to play a simple one-on-one soccer match on a fixed-size 5 m x 4 m court. For this purpose, they used the Robotis OP3 platform, which has 20 controllable joints and various sensors. Their goal was to see if robots could “learn dynamic movement skills such as rapid fall recovery, walking, turning, kicking, and more.” The individual skills were first trained separately in simulation and then combined end-to-end in a self-play setting.

In one of the videos created by the researchers, a robot is tasked with scoring a goal to test its “strength to push.” The robot falls to the ground, then gets back up on its own and scores.

The researchers added that the robot players could automatically transition between these skills “in a smooth, stable and efficient manner – far beyond what is expected of a robot.” The robots also learned to anticipate ball movement and block opponent shots, developing a basic strategic understanding of the game. Although their primary task was to score goals, experiments showed that they walked 156% faster, took 63% less time to get up, and kicked 24% faster than a scripted baseline.
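For readers who want to translate those percentages into plain multiples, here is a minimal Python sketch. It assumes the usual reading of such claims: "X% faster" means the baseline speed multiplied by (1 + X/100), and "X% less time" means the baseline time multiplied by (1 - X/100). The function names are made up for illustration, and the article does not give the underlying baseline measurements, so only ratios are computed.

```python
def faster_to_ratio(percent_faster: float) -> float:
    """Read 'X% faster' as: new speed = baseline speed * (1 + X/100)."""
    return round(1 + percent_faster / 100, 2)


def less_time_to_ratio(percent_less: float) -> float:
    """Read 'X% less time' as: new time = baseline time * (1 - X/100)."""
    return round(1 - percent_less / 100, 2)


# Percentages reported in the article, relative to the scripted baseline.
walk_ratio = faster_to_ratio(156)     # walking speed as a multiple of baseline
getup_ratio = less_time_to_ratio(63)  # get-up time as a fraction of baseline
kick_ratio = faster_to_ratio(24)      # kick speed as a multiple of baseline

print(f"Walking speed: {walk_ratio}x the baseline")
print(f"Get-up time:   {getup_ratio}x the baseline")
print(f"Kick speed:    {kick_ratio}x the baseline")
```

Under that reading, the learned controller walks at roughly two and a half times the scripted baseline's speed and needs a little over a third of its time to get back up.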

Source: DeepMind via Ars Technica